Keras Cheat Sheet

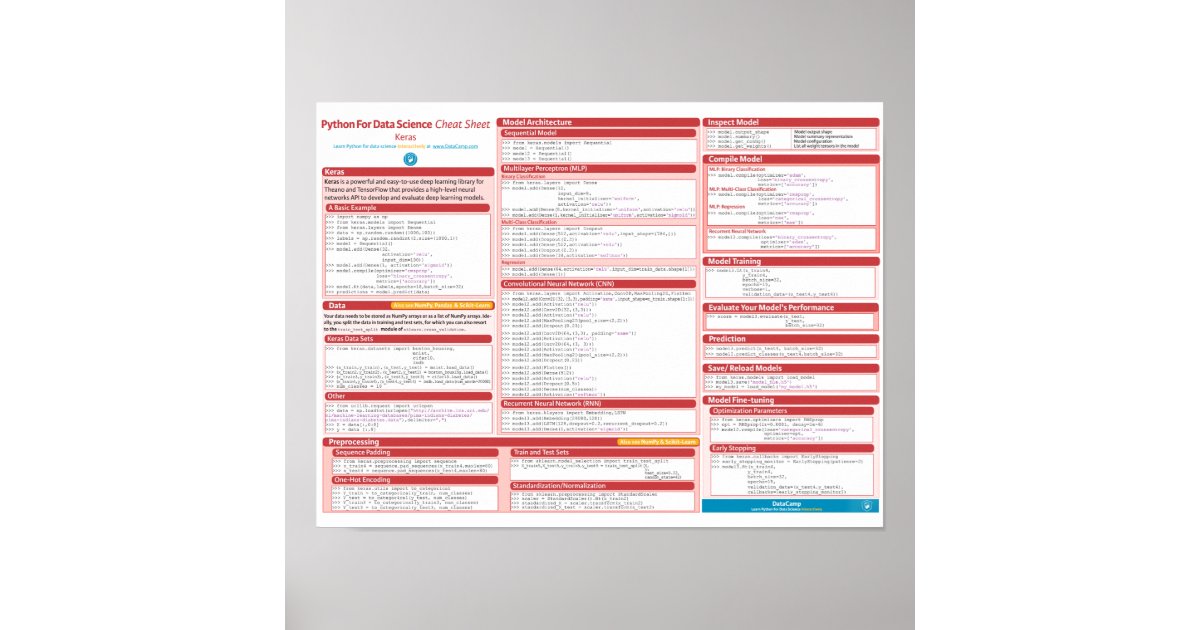

Keras Cheat Sheet: Deep Learning in Python (posted October 7, 2017)

Deep learning is an exciting subfield of machine learning: a set of algorithms inspired by the structure and function of the brain. These algorithms are usually called Artificial Neural Networks (ANNs). Keras is our recommended library for deep learning in Python, especially for beginners. Its minimalist, modular approach makes it a breeze to get deep neural networks up and running. To see the most up-to-date full tutorial, as well as installation instructions, visit the online tutorial at elitedatascience.com.

Keras Cheat Sheet: Neural Networks in Python. Make your own neural networks with this Keras cheat sheet to deep learning in Python for beginners, with code samples. Keras is an easy-to-use and powerful library that runs on top of Theano and TensorFlow and provides a high-level neural networks API to develop and evaluate deep learning models.


TensorFlow was originally a deep learning research project of the Google Brain Team. Through collaboration with 50 teams at Google, it has since become an open source library deployed across the Google ecosystem, including Google Assistant, Google Photos, Gmail, search, and more. With TensorFlow in place, Google can apply deep learning across numerous areas, including perception and language-understanding tasks.

This cheat sheet is an easy way to get up to speed on TensorFlow. We'll update this guide periodically when news and updates about TensorFlow are released.


Executive summary

- What is TensorFlow? Google has one of the largest machine learning infrastructures in the world, and with TensorFlow it can share much of that technology. TensorFlow is an open source library of tools that enables software developers to apply deep learning to their products.

- Why does TensorFlow matter? AI has become crucial to how users interact with services and devices. Having such a powerful set of libraries available lets developers add deep learning capabilities to their products.

- Who does TensorFlow affect? TensorFlow will have a lasting effect on developers and users. Because the library is open source, it is available to all developers, who can use it to make their products more intelligent and accurate.

- When was TensorFlow released? TensorFlow was originally released on November 9, 2015, and the stable release was made available on February 15, 2017. Google has since released TensorFlow 2.4, which includes a number of new features and profiler tools.

- How do I start using TensorFlow? Developers can download the source code from the TensorFlow GitHub repository. Users are already seeing its effects in the Google ecosystem.


What is TensorFlow?

When you have a photo of the Eiffel Tower, Google Photos can identify the landmark in it. This is possible thanks to deep learning and developments like TensorFlow. Before TensorFlow, there was a divide between machine learning researchers and the people building real products, which made it challenging for developers to include deep learning in their software. TensorFlow narrows that divide considerably.

TensorFlow delivers a set of modules (with both Python and C/C++ APIs) for constructing and executing TensorFlow computations, which are expressed as stateful dataflow graphs. These graphs make it possible for applications like Google Photos to become highly accurate at recognizing locations in images based on popular landmarks.


In 2011, Google developed a system called DistBelief that trained neural networks on labeled examples. The machine would be given a picture and asked whether it showed a cat. If it guessed correctly, it was told so; an incorrect guess led to an adjustment so that it could better recognize the image next time.

TensorFlow builds on this concept with neural networks made of layers of nodes. Deeper layers allow for more complex questions about an image. For example, a first-layer question might simply require the machine to recognize a round shape; in deeper layers, the machine might be asked to recognize a cat's eye. The data passed from the input, through the layers, to the output takes the form of tensors (multidimensional arrays), and the computation is described as a graph through which those tensors flow, hence the name TensorFlow.
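To make the naming concrete: a tensor is a multidimensional array, and a TensorFlow program passes tensors from one operation to the next. The minimal sketch below assumes TensorFlow 2.x; the two weight matrices `w1` and `w2` are hypothetical, filled with ones purely so the arithmetic is easy to follow.

```python
import tensorflow as tf

# A tensor: a batch of 2 examples with 3 features each.
x = tf.constant([[1.0, 2.0, 3.0],
                 [4.0, 5.0, 6.0]])
print(x.shape, x.dtype)

# Hypothetical weights for two tiny "layers" (all ones, for illustration only).
w1 = tf.ones((3, 4))  # layer 1: 3 inputs -> 4 units
w2 = tf.ones((4, 1))  # layer 2: 4 inputs -> 1 output

# Each operation consumes tensors and produces new tensors that flow into the next one.
h = tf.nn.relu(tf.matmul(x, w1))  # shape (2, 4)
y = tf.matmul(h, w2)              # shape (2, 1)
print(y.numpy())
```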

What is TensorFlow 2.0?

TensorFlow 2.0, which Google rolled out in 2019, came with the following improvements:

- Makes API components integrate better with tf.keras (TensorFlow's implementation of the Keras high-level API for neural networks).

- Includes TensorFlow.js version 1.0, which lets you use off-the-shelf JavaScript models, retrain existing JS models, and build and train models directly in JavaScript.

- Includes TensorFlow Federated, an open source framework for experimenting with machine learning (and other computations) on decentralized data.

- Includes TF Privacy, a library for training machine learning models with a focus on the privacy of training data.

- Features eager execution, an imperative programming environment that evaluates operations immediately: operations return concrete values instead of building graphs to run later.

- Uses tf.function, which transforms a subset of Python syntax into portable, high-performance graphs, combining the convenience of eager-style code with the performance and deployability of graphs.

- Enables advanced experimentation with new extensions: Ragged Tensors (the TensorFlow equivalent of nested variable-length lists), TensorFlow Probability (a Python library built on TensorFlow that makes it easy to combine probabilistic models and deep learning), and Tensor2Tensor (a library of deep learning models and datasets).

- Provides a conversion tool (tf_upgrade_v2) that automatically updates TensorFlow 1.x Python code to use TensorFlow 2.0-compatible APIs and flags cases that cannot be converted automatically.
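Eager execution, tf.function, and ragged tensors can each be tried in a few lines. This is a sketch assuming TensorFlow 2.x; the function `scaled_sum` and its inputs are hypothetical examples, not part of any TensorFlow API.

```python
import tensorflow as tf

# Eager execution (the TF 2.x default): operations run immediately and return values.
a = tf.constant([1.0, 2.0, 3.0])
b = a * 2.0
print(b.numpy())  # available right away, no session or separate graph needed

# tf.function traces this Python function into a graph on the first call
# for a given input signature, then reuses that graph on later calls.
@tf.function
def scaled_sum(x, scale):
    return tf.reduce_sum(x * scale)

total = scaled_sum(a, tf.constant(2.0))
print(total.numpy())

# The traced graph can be inspected through a concrete function.
concrete = scaled_sum.get_concrete_function(
    tf.TensorSpec(shape=[None], dtype=tf.float32),
    tf.TensorSpec(shape=[], dtype=tf.float32),
)
op_types = {op.type for op in concrete.graph.get_operations()}
print(sorted(op_types))

# A RaggedTensor holds rows of different lengths, like nested variable-length lists.
ragged = tf.ragged.constant([[1, 2, 3], [4], [5, 6]])
print(ragged.row_lengths().numpy())
```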


Why does TensorFlow matter?

Machine learning is the secret sauce for tomorrow's innovation. Deep learning, a subfield of machine learning, is considered a class of algorithms that:

- use many layers of nonlinear processing units for feature extraction and transformation;

- are based on the learning of multiple levels of features or representations of the data; and

- learn multiple levels of representation corresponding to different levels of abstraction.


Thanks to machine learning, software and devices continue to become smarter. With today's demanding consumers and the rise of big data, this evolution has become essential to the success of a developer and their product. And because TensorFlow is open source, anyone can make use of this leap forward from Google. TensorFlow is distributed under the permissive Apache 2.0 license.

With developers and companies able to use the TensorFlow libraries, more and more applications and devices will become smarter, faster, and more reliable. TensorFlow can sort through vast numbers of images at a very high rate.

Because Google made TensorFlow open source, the libraries can be both improved upon and extended to other languages such as Java, Lua, and R. This move brings machine learning, previously practical mainly for research institutes, to every developer, so they can teach their systems and software to recognize images or translate speech. That's big.

Who does TensorFlow affect?

TensorFlow not only makes it possible for developers to bring the benefits of deep learning into their products, but also makes devices and software more intelligent and easier to use. In our modern, mobile, always-connected world, that means everyone is affected: software designers, developers, small businesses, enterprises, and consumers all feel the end result of deep learning. The fact that Google created and released a software library that makes deep learning far more accessible is a big win for all.


When was TensorFlow released?

TensorFlow was originally released on November 9, 2015, and the stable release was made available on February 15, 2017. TensorFlow 2.0 followed in September 2019. You can learn more about TensorFlow 2 in the official Get Started with TensorFlow guide.

The libraries, APIs, and development guides are available now, so developers can begin to include TensorFlow into their products. Users are already seeing the results of TensorFlow in the likes of Google Photos, Gmail, Google Search, Google Assistant, and more.


What new features are found in TensorFlow 2.4?

New features in TensorFlow 2.4 include:

- The tf.distribute module now includes experimental support for asynchronous training of models with ParameterServerStrategy and custom training loops. To get started with this strategy, read through the Parameter Server Training tutorial, which demonstrates how to set up ParameterServerStrategy.

- MultiWorkerMirroredStrategy is now part of the stable API and implements distributed training with synchronous data parallelism.

- The Keras mixed precision API is now part of the stable API and allows the use of 16-bit and 32-bit floating point types together.

- tf.keras.optimizers.Optimizer has been refactored so that code written with model.fit or with custom training loops works with any optimizer.

- Experimental support for a NumPy API subset, tf.experimental.numpy, has been introduced, which enables developers to run TensorFlow-accelerated NumPy code.

- New profiler tools have been added so developers can measure the training performance and resource consumption of TensorFlow models.

- TensorFlow now runs with CUDA 11 and cuDNN 8, which enables support for the NVIDIA Ampere GPU architecture.
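Two of these features, the NumPy API subset and the Keras mixed precision API, can be sketched as follows. This assumes TensorFlow 2.5 or later, where `tf.experimental.numpy` functions return ordinary tensors. Mixed precision only pays off on hardware with fast float16 support; on a CPU the policy still applies but may run slower. The array values are arbitrary.

```python
import tensorflow as tf
import tensorflow.experimental.numpy as tnp
from tensorflow import keras

# NumPy-style calls executed by TensorFlow ops.
m = tnp.asarray([[1.0, 2.0], [3.0, 4.0]])
col_sums = tnp.sum(m, axis=0)
print(col_sums)

# Mixed precision: layers compute in float16 but keep their variables in float32.
keras.mixed_precision.set_global_policy("mixed_float16")
try:
    layer = keras.layers.Dense(4)
    compute_dtype = layer.compute_dtype
    variable_dtype = layer.variable_dtype
finally:
    # Restore the default policy so later code is unaffected.
    keras.mixed_precision.set_global_policy("float32")
print(compute_dtype, variable_dtype)
```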

Competitors to TensorFlow

TensorFlow isn't alone in the deep learning field; a number of other companies offer machine learning frameworks as well.


How do I start using TensorFlow?

The first thing any developer should do is read the TensorFlow Getting Started guide, which includes a TensorFlow Core tutorial. If you're new to machine learning, make sure to check out the beginner guides as well.

Developers can install TensorFlow on Linux, Mac, and Windows (or even build it from source), or check out its various tools on the official TensorFlow GitHub page.

Finally, developers can take advantage of all the official TensorFlow guides.


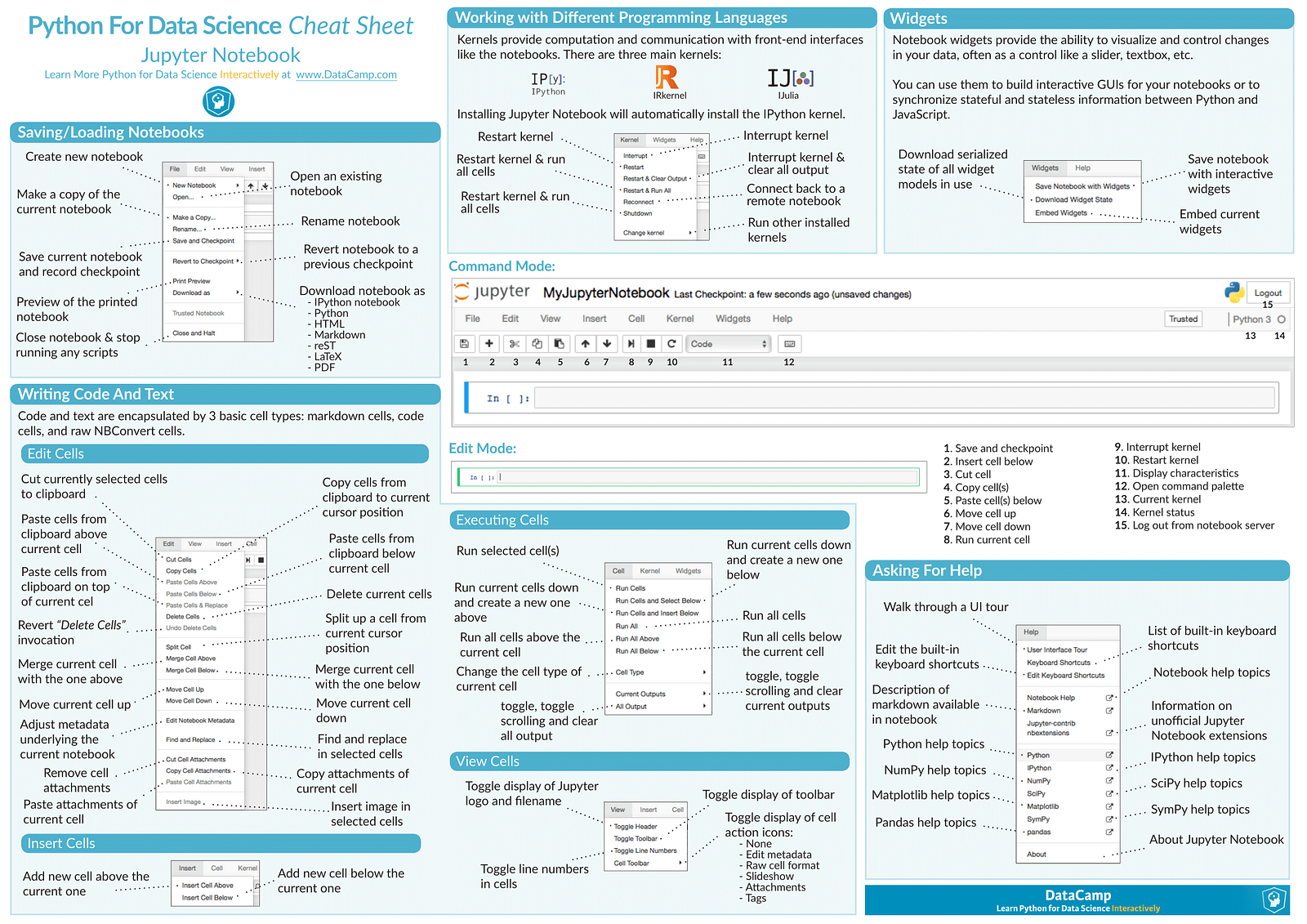

By Karlijn Willems, DataCamp.

Deep Learning With Python

Deep learning is an exciting subfield of machine learning: a set of algorithms inspired by the structure and function of the brain. These algorithms are usually called Artificial Neural Networks (ANNs). Deep learning is one of the hottest fields in data science, with many case studies showing impressive results in robotics, image recognition, and Artificial Intelligence (AI).

This undoubtedly sounds very exciting (and it is!), but it is also one of the more complex topics in data science to get into. If you have prior machine learning experience, though, you should find it fairly easy to get started with deep learning, as you will have already shown that you understand, have practiced, and have assimilated the necessary mathematics, statistics, and machine learning basics. Maybe you have already worked on machine learning projects, or you have even participated in a Kaggle or DrivenData competition!

However, even with this prior experience, you’ll still find this topic interestingly challenging! That doesn’t mean you can’t dive into code straight away: you can get a high-level idea of how deep learning techniques work by using, for example, the Keras package. This package is ideal for beginners, as it offers a high-level neural networks API with which you can develop and evaluate deep learning models easily and quickly.

Nevertheless, doubts may always arise, and when they do, take a look at DataCamp’s Keras tutorial or download the cheat sheet for free!

In what follows, we’ll dive deeper into the structure and the contents of the cheat sheet.

Keras Cheat Sheet

Starting with Keras is not too hard if you take into account that there are some steps that you need to go through: gathering your data, preprocessing it, constructing your model, compiling and fitting your model, evaluating the model’s performance, making predictions and fine-tuning the model.

This might seem quite abstract. Let’s take a quick look at an example.

A very basic example of using the Keras library is a simple neural network with just an input layer and an output layer. To build this model, you need to import two classes from the Keras package: Sequential and Dense.

Next, you need some data. This example uses the random module of NumPy, the fundamental package for scientific computing in Python, to quickly generate some data and labels, which is why you also import the numpy package with the conventional alias np. With the functions from the random module, you first construct a data array of size (1000, 100). Next, you construct a labels array of size (1000, 1) that consists of zeroes and ones.
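The data-generation step might look like this with NumPy. The shapes follow the text; the values are random, so they carry no meaning.

```python
import numpy as np

# 1000 samples with 100 features each, drawn uniformly from [0.0, 1.0).
data = np.random.random((1000, 100))

# One binary label (0 or 1) per sample, shaped as a column vector.
labels = np.random.randint(2, size=(1000, 1))

print(data.shape, labels.shape)  # (1000, 100) (1000, 1)
```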

With the data at hand, you can start constructing your neural network architecture. A quick way to get started is the Keras Sequential model: a linear stack of layers. You can create it either by passing a list of layer instances to the constructor or by creating an empty model with model = Sequential() and adding layers one at a time. Here, you first add an input layer to the model with the add() function. You pick a dense, or fully connected, layer and indicate that it receives the input by using the argument input_dim. You also use one of the most common activation functions, relu, and pick 32 units for this first layer. Next, you add another dense layer as the output layer. It has a single unit with a sigmoid activation function, which outputs a probability.

With the model built, you can compile it with the compile() function. Here you configure the model with the rmsprop optimizer and the binary_crossentropy loss function. Additionally, you can monitor the accuracy during training by passing ['accuracy'] to the metrics argument.

Next, you fit the model to the data with fit(): you pass in the data and the labels, and set the number of epochs and the batch size. Finally, you can start making predictions with the predict() function. Just pass in the data!
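Putting these steps together, a sketch of the whole basic example could look like the following. It assumes TensorFlow 2.x with its bundled Keras and declares the input size with `keras.Input(shape=(100,))`; older Keras code expresses the same thing with `Dense(32, activation='relu', input_dim=100)`. Ten epochs and a batch size of 32 are illustrative choices, and since the labels are random, the accuracy will hover around chance.

```python
import numpy as np
from tensorflow import keras

# Random data and binary labels, as described above.
data = np.random.random((1000, 100))
labels = np.random.randint(2, size=(1000, 1))

# A linear stack of layers: 100 inputs -> 32 relu units -> 1 sigmoid output.
model = keras.Sequential([
    keras.Input(shape=(100,)),
    keras.layers.Dense(32, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),  # probability of class 1
])

# Configure the learning process.
model.compile(optimizer="rmsprop",
              loss="binary_crossentropy",
              metrics=["accuracy"])

# Train, then predict on the same inputs.
history = model.fit(data, labels, epochs=10, batch_size=32, verbose=0)
predictions = model.predict(data, verbose=0)
```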


Simple enough, right? Let’s take a look at all these steps in more detail.

Data

As you might have gathered from the short example above, your data needs to be stored as a NumPy array or as a list of NumPy arrays in order to get started. Ideally, you also split the data into training and test sets, which the example above neglected. For this, you can use the train_test_split() function from the model_selection module of Scikit-Learn, the machine learning library for Python (older releases kept it in the now-removed cross_validation module).
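A train/test split with Scikit-Learn might look like this. It assumes scikit-learn 0.20 or later, where the function lives in `sklearn.model_selection`; `test_size=0.33` and `random_state=42` are illustrative values, and the arrays are random placeholders.

```python
import numpy as np
from sklearn.model_selection import train_test_split

X = np.random.random((1000, 100))
y = np.random.randint(2, size=(1000, 1))

# Hold out 33% of the rows for testing; random_state makes the shuffle reproducible.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=42)

print(X_train.shape, X_test.shape)
```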

If you want to work with the data sets that come with the Keras library, you can import them from the datasets module. Each data set's load_data() function loads the data into your workspace, already split into training and test sets. Alternatively, you can use Python's urllib library and its request module to open and read URLs.

Preprocessing

Now that you have the data, you can easily proceed to preprocessing it. Of course, depending on your data, you’ll need to resort to different functions to make sure that the data looks exactly the way it needs to look to pass it to the neural network model.

For example, you can use sequence padding with pad_sequences() to ensure that all sequences in a list have the same length, or you can use one-hot encoding with to_categorical() to turn a vector of integer class labels into a binary matrix with one column per class. Both functions come with the Keras library.
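The two Keras helpers can be sketched as follows, assuming a Keras version that exposes both under `keras.utils` (recent TensorFlow releases do; older ones offered padding as `keras.preprocessing.sequence.pad_sequences`). The sequences and labels are toy values.

```python
from tensorflow import keras

# Three toy sequences of word indices with different lengths.
sequences = [[1, 2, 3], [4, 5], [6]]

# Pad with 0 so every sequence has length 3; by default padding goes at the front.
padded = keras.utils.pad_sequences(sequences, maxlen=3)
print(padded)
# [[1 2 3]
#  [0 4 5]
#  [0 0 6]]

# Integer class labels -> one-hot rows, one column per class.
labels = [0, 2, 1]
one_hot = keras.utils.to_categorical(labels, num_classes=3)
print(one_hot)
```

Passing `padding='post'` to `pad_sequences` pads at the end of each sequence instead.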

However, as mentioned before, you will most probably also need other libraries for preprocessing: think of the train and test set splits, or the standardization and normalization functions that come with the Scikit-Learn library. If you’d like to know more, take a look at the scikit-learn documentation or DataCamp’s scikit-learn cheat sheet.
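Standardization with Scikit-Learn might look like this. The random feature matrices are hypothetical stand-ins for real data (13 columns, as in a housing data set). The key design choice is fitting the scaler on the training set only and reusing it for the test set, so no test-set statistics leak into training.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical features on a large scale.
rng = np.random.default_rng(0)
X_train = rng.random((670, 13)) * 100.0
X_test = rng.random((330, 13)) * 100.0

# Learn the per-feature mean and standard deviation from the training set only...
scaler = StandardScaler().fit(X_train)

# ...then apply the same transformation to both sets.
X_train_std = scaler.transform(X_train)
X_test_std = scaler.transform(X_test)
```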

Model Architecture

With your preprocessed data, you can start making your model. As you saw in the basic example above, you first start off by using the Sequential model. Then, you can get down to the real work and add layers to your model!

Sequential Model

Import Sequential from keras.models and initialize your model by calling the Sequential() constructor and assigning the result to model. For this cheat sheet, we’ll work with three kinds of models: the Multilayer Perceptron (MLP) for binary classification, multi-class classification, and regression; the Convolutional Neural Network (CNN); and the Recurrent Neural Network (RNN).

Multilayer Perceptron (MLP)

Networks of perceptrons are called multilayer perceptrons, also known as “feed-forward neural networks”. As you might have guessed, these are more complex than a single perceptron, as they consist of multiple neurons organized in layers. The number of layers is usually limited to two or three, but theoretically, there is no limit!

Binary Classification

First up is the MLP model for binary classification. In this case, you’ll make a model that predicts whether Pima Indians have an onset of diabetes within five years.


To do this, you first import Dense from keras.layers, and then you can start building your neural network architecture. Just like in the example at the start of this post, you first need to make an input layer. Since the model needs to know what input shape to expect, the first layer always receives an input_shape or input_dim argument (some temporal layers also accept input_length, and a fixed batch size can be set with batch_size).
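A sketch of the binary-classification MLP, assuming TensorFlow 2.x. The Pima Indians data set has 8 input features per patient; the layer sizes (12, 8, 1) are illustrative, and random numbers stand in for the real data, which is not loaded here.

```python
import numpy as np
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(8,)),                      # 8 features per patient
    keras.layers.Dense(12, activation="relu"),
    keras.layers.Dense(8, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),  # probability of diabetes onset
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Random placeholder rows standing in for real patient records.
X = np.random.random((5, 8))
probs = model.predict(X, verbose=0)
```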

Multi-Class Classification

Next up, you build a multi-class classification model for the MNIST data set to recognize handwritten digits. In this model, you’ll use not only Dense layers but also Dropout layers. Dropout layers randomly ignore a fraction of neurons during training, which reduces the chance of overfitting.

As you saw in the first model, you also pass the input_shape for the input layer and you also fill in the activation argument for all Dense layers. You set the dropout rate at 0.2 for the Dropout layers.
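A sketch of the MNIST MLP with dropout, assuming TensorFlow 2.x and images flattened to 784 pixel values; random inputs stand in for the real images. The last lines show what a `Dropout` layer does on its own: during training it zeroes a random fraction of its inputs and scales the survivors by `1 / (1 - rate)`, while at inference time it passes inputs through unchanged.

```python
import numpy as np
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(784,)),                     # 28x28 images, flattened
    keras.layers.Dense(512, activation="relu"),
    keras.layers.Dropout(0.2),                     # drop 20% of activations while training
    keras.layers.Dense(512, activation="relu"),
    keras.layers.Dropout(0.2),
    keras.layers.Dense(10, activation="softmax"),  # one probability per digit
])
model.compile(optimizer="rmsprop", loss="categorical_crossentropy", metrics=["accuracy"])

X = np.random.random((3, 784)).astype("float32")
probs = model.predict(X, verbose=0)

# Dropout in isolation, with rate 0.5 so the scaling factor is 2.0.
drop = keras.layers.Dropout(0.5)
ones = np.ones((1, 1000), dtype="float32")
train_out = np.asarray(drop(ones, training=True))   # about half zeros, the rest 2.0
infer_out = np.asarray(drop(ones, training=False))  # unchanged: all ones
```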

Regression

A classic data set for regression is the Boston housing data set. In this case, you build a simple model with just an input and an output layer. Once again, the Dense layer is used, to which you pass the units, the activation function and the input dimensions. In the output layer, you specify that you want to have one unit back.
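A sketch of the regression model, assuming TensorFlow 2.x. Each Boston housing sample has 13 features; 64 hidden units and the rmsprop/mean-squared-error setup are illustrative choices, and the random arrays are placeholders for the real data.

```python
import numpy as np
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(13,)),                   # 13 features per house
    keras.layers.Dense(64, activation="relu"),  # hidden layer
    keras.layers.Dense(1),                      # one linear unit: the predicted value
])
model.compile(optimizer="rmsprop", loss="mse", metrics=["mae"])

# Random placeholders for the real training data and targets.
X_train = np.random.random((100, 13))
y_train = np.random.random((100, 1))
history = model.fit(X_train, y_train, epochs=2, batch_size=16, verbose=0)

predictions = model.predict(X_train[:5], verbose=0)
```

The output layer has no activation, so it can produce any real number, which suits a continuous target such as a house price.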

Convolutional Neural Network (CNN)

A Convolutional Neural Network (CNN) is a type of deep, feed-forward artificial neural network that has been successfully applied to analyzing visual imagery. The CNN in the cheat sheet is built for the CIFAR10 data set, which is well known and widely used for object recognition.

In this case, you see that some other layers are imported to build the CNN model: Activation, Conv2D, MaxPooling2D, and Flatten. These layers, together with the ones you have already seen, are combined so that you can classify the CIFAR10 images.

Note that you can find the complete example in the examples folder of the Keras repository.
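A condensed sketch of such a CIFAR10 CNN, assuming TensorFlow 2.x and 32x32 RGB inputs with 10 classes. The layer sizes and dropout rates follow a common layout for this data set but are illustrative, `RMSprop(learning_rate=0.0001)` is an assumed optimizer setting, and random images stand in for the real data.

```python
import numpy as np
from tensorflow import keras

layers = keras.layers
num_classes = 10

model = keras.Sequential([
    keras.Input(shape=(32, 32, 3)),          # 32x32 RGB images
    layers.Conv2D(32, (3, 3), padding="same"),
    layers.Activation("relu"),
    layers.Conv2D(32, (3, 3)),
    layers.Activation("relu"),
    layers.MaxPooling2D(pool_size=(2, 2)),   # halve the spatial size
    layers.Dropout(0.25),
    layers.Conv2D(64, (3, 3), padding="same"),
    layers.Activation("relu"),
    layers.Conv2D(64, (3, 3)),
    layers.Activation("relu"),
    layers.MaxPooling2D(pool_size=(2, 2)),
    layers.Dropout(0.25),
    layers.Flatten(),                        # feature maps -> one vector
    layers.Dense(512),
    layers.Activation("relu"),
    layers.Dropout(0.5),
    layers.Dense(num_classes),
    layers.Activation("softmax"),            # class probabilities
])
model.compile(optimizer=keras.optimizers.RMSprop(learning_rate=0.0001),
              loss="categorical_crossentropy",
              metrics=["accuracy"])

images = np.random.random((2, 32, 32, 3)).astype("float32")
probs = model.predict(images, verbose=0)
```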


Recurrent Neural Network (RNN)

A Recurrent Neural Network is the last type of network included in the cheat sheet: a popular model that has shown good results in NLP tasks. RNNs are not really like feed-forward networks, since connections between their units form a directed cycle. For this cheat sheet, the included model is for the IMDB data set, and the task is sentiment classification.


This last example uses the Embedding and LSTM layers. With the Embedding layer, you map each word index in a movie review to a dense, real-valued vector. You can then pass the output of the Embedding layer straight into the LSTM layer. Lastly, make sure to add an output layer with only 1 unit and an activation function (in this case, the sigmoid activation function).
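A sketch of the IMDB sentiment model, assuming TensorFlow 2.x, a vocabulary of the 20,000 most frequent words, and reviews padded or truncated to 80 word indices (both numbers are illustrative). Random word indices stand in for real reviews.

```python
import numpy as np
from tensorflow import keras

model = keras.Sequential([
    keras.Input(shape=(80,), dtype="int32"),  # 80 word indices per review
    keras.layers.Embedding(20000, 128),       # index -> 128-dimensional vector
    keras.layers.LSTM(128, dropout=0.2, recurrent_dropout=0.2),
    keras.layers.Dense(1, activation="sigmoid"),  # probability the review is positive
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])

# Random word indices standing in for two padded reviews.
X = np.random.randint(1, 20000, size=(2, 80))
probs = model.predict(X, verbose=0)
```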


PS: If you want to know more about neural network architectures, definitely check out this mostly complete chart of neural networks. Also, if you’d like to know more about constructing neural network models with Keras, check out DataCamp’s Keras course.